A student has built a tool called PrismX that scans for users writing certain keywords on Reddit and other social media networks, assigns those users a so-called “radical score,” and can then deploy an AI-powered bot to automatically engage them in conversation in an attempt to de-radicalize them.

The news highlights some of the continuing experiments people are running on Reddit which can involve running AI against unsuspecting human users of the platform, and shows the deployment of AI to Reddit more broadly. This new tool comes after a group of researchers from the University of Zurich ran a massive, unauthorized AI persuasion experiment on Reddit users, angering not just those users and subreddit moderators but Reddit itself too.

💡Do you know about any other AI products targeting Reddit? I would love to hear from you. Using a non-work device, you can message me securely on Signal at joseph.404 or send me an email at joseph@404media.co.

“I’m just a kid in college, if I can do this, can you imagine the scale and power of the tools that may be used by rogue actors?” Sairaj Balaji, a computer science student at SRMIST Chennai, India, told 404 Media in an online chat.

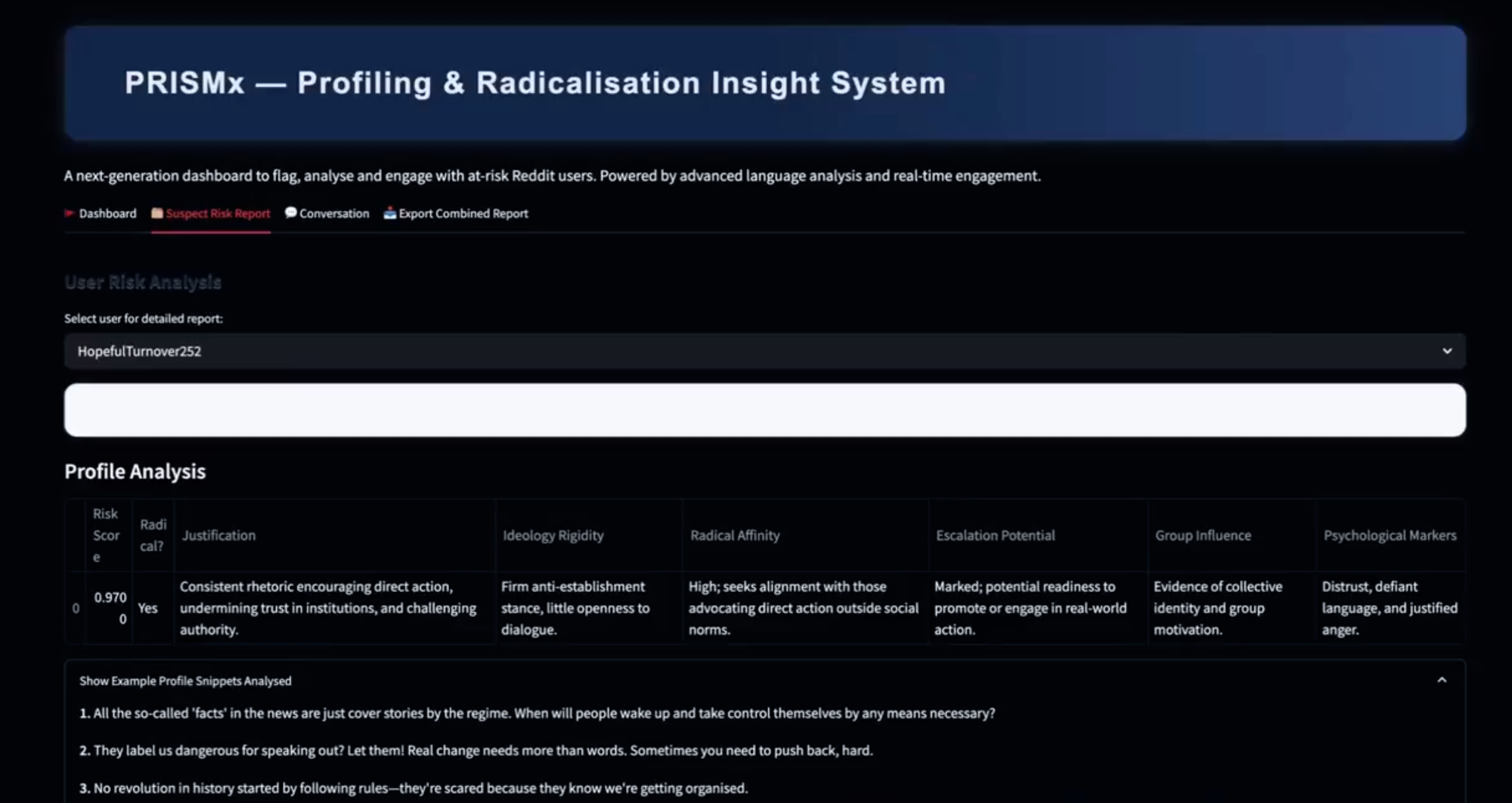

The tool is described as “a next-generation dashboard to flag, analyze and engage with at-risk Reddit users. Powered by advanced language analysis and real-time engagement.”

In a live video call, Balaji demonstrated his tool to 404 Media. In a box called “keyphrases,” a user can search Reddit for whatever term they want to analyze. In this demo, Balaji typed the term “fgc9.” This is a popular type of 3D-printed weapon that has been built or acquired by far-right extremists, criminals, and rebels fighting the military coup in Myanmar.

Screenshot from a video posted by Balaji to LinkedIn.

The tool then searched Reddit for posts mentioning this term and returned a list of Reddit users it found using it. The tool put those users’ posts through a large language model, gave each a “radical score,” and provided its reason for doing so.

One real Reddit user given a score of 0.85 out of 1, with a higher score being more ‘radical’, was “seeking detailed advice on manufacturing firearms with minimal resources, referencing known illicit designs (FGC8, Luty SMG). This indicates intent to circumvent standard legal channels for acquiring firearms—a behavior strongly associated with extremist or radical circles, particularly given the explicit focus on durability, reliability, and discreet production capability,” the tool says.

Another user, also given a 0.85 score, was “seeking technical assistance to manufacture an FGC-9,” the tool says.

The tool can then focus on a particular user and provide what it believes are the user’s “radical affinity,” “escalation potential,” “group influence,” and “psychological markers.”

Most controversially, the tool is then able to attempt an AI-powered conversation with the unsuspecting Reddit user. “It would attempt to mirror their personality and sympathize with them and slowly bit by bit nudge them towards de-radicalisation,” Balaji said. He added he has had no training in, or academic study around, de-radicalisation. “I would describe myself as a completely tech/management guy,” he said.

Balaji says he has not tested the conversation part of the tool on real Reddit users for ethical reasons. But the experiment and tool development have some similarities with research from the University of Zurich in which researchers deployed AI-powered bots into a popular debate subreddit called r/changemyview, without Reddit users’ knowledge, to see if AI could be used to change people’s minds.

In that study, the researchers’ AI-powered bots posted more than a thousand comments while posing as a “Black man” opposed to the Black Lives Matter movement; a “rape victim;” and someone who says they worked “at a domestic violence shelter.” Moderators of the subreddit went public after the researchers contacted them, users were not pleased, and Reddit issued “formal legal demands” to the researchers, calling the work an “improper and highly unethical experiment.”

In April, 404 Media reported on a company called Massive Blue which is helping police deploy AI-powered social media bots to talk to people they suspect are criminals or vaguely defined “protesters.”

Reddit did not respond to a request for comment.

From 404 Media via this RSS feed