I’m shocked that a guy whose wealth is based on investor hype for AI makes a statement that AI is going to be exponentially more valuable.

The guy is such a con artist. All the statements about how they fear the AI will be so smart and powerful are coming from the AI owners. It’s bullshit. If they actually thought their AI could kill everyone, they wouldn’t be bragging about it in the press.

I love how the statement doesn’t say anything about what the AI will do, just that it’ll be a lot. Like it’s gotta set off people’s bullshit detector when the promise is both enormous and very vague lol

I genuinely hope that this garbage technology falls apart at the seams, and soon. Not only is it destroying the environment, but it’s becoming a large factor in brain drain among students and adults.

This shit is gonna fail soon and take this sham of an economy with it. The more outlandish the claims, the more guaranteed that is.

What’s sad is that people like him won’t get punished, instead they’ll get films made about what they did like with the last crash.

You are right as of today, but give it time.

Sam Altman should be tortured

Method of torture: force him to type back responses to ChatGPT queries for the rest of his life.

Please, please, we can just throw him down a mineshaft.

No. Don’t dirty the earth with his presence.

“AI will make it so that decades happen, every week” - Vladimir Linux

If the AI were really as effective as the AI companies are saying, they’d use it for themselves instead of selling it at a loss

What kind of dweeb says “a few thousand days away” instead of “in a couple of years”?

investor brain: “days are shorter than years so it’s closer”

I highly highly doubt it. It is really good for the main thing it is designed for: language and translation. And because of its function it can hallucinate for you decent code or answers to basic issues.

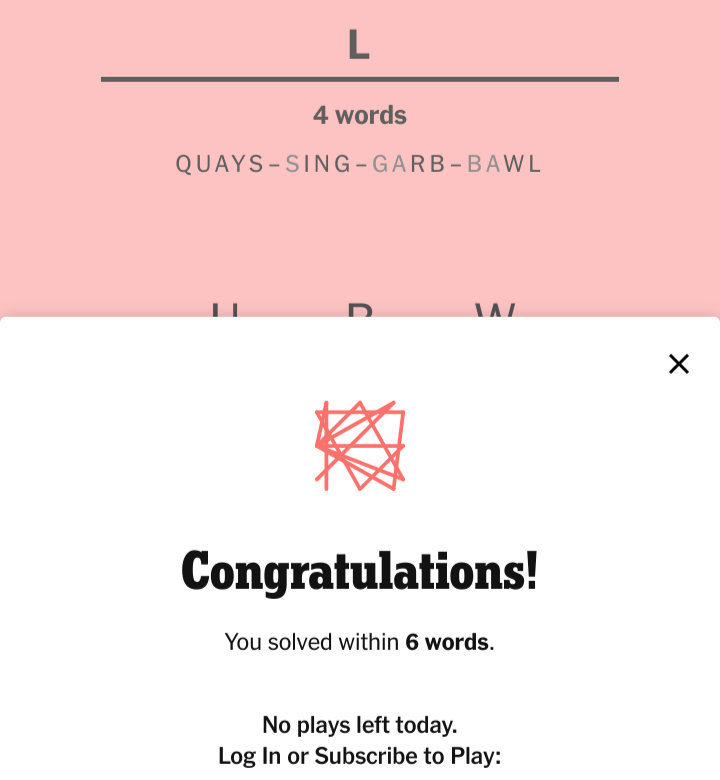

Try something, I’ve done this personally. Go to any of the big “AI” models right now. In another tab, open up the NYT games, letter boxed. Now try and teach the “AI” to play letter boxed. It isn’t a complicated game but the few restrictions you do have are hard restrictions. For a language model coming up with words that fit the restriction and giving you a solution should be pretty straightforward if it is truly smart. Just a list of a few words that meet the criteria of the game that will solve today’s puzzle is all it needs to do. Do it. See what happens.

EDIT: Been a few weeks since I last tried. Just tested it on 3 big models. All failed. The answers almost look right for some of them. But they fail. Here was my input:

You will solve a word puzzle. All rules must be followed all the time. There will be four arrays of letters labelled 1, 2, 3, 4. The first word may start with any letter from any of the arrays. A word must be in the English language. A word can contain more than one letter from any array. A word can not contain two letters next to each other from the same array. A word may not contain the same two letters next to each other. After the first word is chosen the next word must contain the last letter of the previous word. All letters from the arrays must be used at least once. A solution should contain less than 5 words. 1: E, T, N; 2: O, S, B; 3: W, H, U; 4: V, A, L; Solve this word puzzle using the rules and letter arrays as stated.

Go to any LLM and have it list every US state with the letter “R” in it. Be amazed at its idiocy.
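For comparison, the "states with the letter R" task is a one-line filter for a conventional program. A minimal Python sketch follows; the names `US_STATES` and `states_containing` are made up for illustration and are not from any library.

```python
# The 50 US states, typed out by hand for this example.
US_STATES = [
    "Alabama", "Alaska", "Arizona", "Arkansas", "California", "Colorado",
    "Connecticut", "Delaware", "Florida", "Georgia", "Hawaii", "Idaho",
    "Illinois", "Indiana", "Iowa", "Kansas", "Kentucky", "Louisiana",
    "Maine", "Maryland", "Massachusetts", "Michigan", "Minnesota",
    "Mississippi", "Missouri", "Montana", "Nebraska", "Nevada",
    "New Hampshire", "New Jersey", "New Mexico", "New York",
    "North Carolina", "North Dakota", "Ohio", "Oklahoma", "Oregon",
    "Pennsylvania", "Rhode Island", "South Carolina", "South Dakota",
    "Tennessee", "Texas", "Utah", "Vermont", "Virginia", "Washington",
    "West Virginia", "Wisconsin", "Wyoming",
]


def states_containing(letter, states=US_STATES):
    """Return the states whose name contains `letter`, ignoring case."""
    letter = letter.lower()
    return [name for name in states if letter in name.lower()]


if __name__ == "__main__":
    for name in states_containing("R"):
        print(name)
```

The check works on characters, which is exactly the view of the text that a token-based model does not get by default.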

A word can not contain two letters next to each other from the same array.

I think this is ambiguously phrased. Does this mean that a word can’t contain two letters, regardless of their position in the word, that are next to each other in a given array? or does it mean that the word can’t have two letters neighboring each other in the word that are from the same array? For example the word EAT has E and T from array 1 and they are neighboring in the array but not in the word. Is it valid or invalid?

It doesn’t matter. The “AI” will fail just about any and all of the criteria as you go through iterations. Try it yourself. It will even fail BOTH of your examples of what that can mean, which is a feat a human would not do. A human would choose one and stick with it.

i wasnt asking because i care about the ai, im asking because i was trying to solve the puzzle and wasnt sure lol

So a better way to phrase that “rule” might be:

Two letters from the same array may not be next to each other in a word.

This could only be interpreted in one way by a human. If you tried to interpret it in the way that two letters may not be next to each other in the array, well, that doesn’t make sense. As soon as you have more than 1 letter in an array they will be next to other letters in the array. So it could only be interpreted as regarding the placement of the letters in the chosen word(s) for the solution.
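Under that reading (adjacency in the word, not in the array), the rules from the prompt can be checked mechanically, which makes it easy to grade whatever a model hands back. Here is a rough Python sketch under some assumptions: `check_solution` and the `strict_chain` flag are invented names, the "must be an English word" rule is not checked because that needs a word list, and by default the linking rule follows the prompt's wording (the next word must *contain* the previous word's last letter). The actual NYT game requires the next word to *start* with it, which is what `strict_chain=True` checks.

```python
# Letter arrays copied from the prompt in this thread.
ARRAYS = {1: "ETN", 2: "OSB", 3: "WHU", 4: "VAL"}


def check_solution(words, arrays=ARRAYS, max_words=4, strict_chain=False):
    """Return a list of rule violations; an empty list means all checked rules pass.

    Not checked: whether each word is real English (that needs a dictionary).
    """
    # Map each letter to the label of the array it belongs to.
    side = {ch: label for label, letters in arrays.items() for ch in letters}
    words = [w.upper() for w in words if w]
    problems = []

    if not words:
        problems.append("no words given")
    if len(words) > max_words:
        problems.append(f"{len(words)} words used, limit is {max_words}")

    for word in words:
        stray = sorted(set(word) - set(side))
        if stray:
            problems.append(f"{word}: letters not in any array: {stray}")
        # A doubled letter is also caught here, since it shares an array with itself.
        for a, b in zip(word, word[1:]):
            if a in side and b in side and side[a] == side[b]:
                problems.append(f"{word}: '{a}{b}' are adjacent and from the same array")

    for prev, nxt in zip(words, words[1:]):
        last = prev[-1]
        linked = nxt.startswith(last) if strict_chain else last in nxt
        if not linked:
            problems.append(f"{nxt}: does not link to last letter '{last}' of {prev}")

    unused = sorted(set(side) - set("".join(words)))
    if unused:
        problems.append(f"unused letters: {unused}")
    return problems


if __name__ == "__main__":
    # B and O sit in the same array, so this fails the adjacency rule.
    print(check_solution(["BONE"]))
    # A hand-checked candidate for these arrays (spoiler); it passes every rule checked here.
    print(check_solution(["SHOVEL", "LUBE", "EAT", "TOWN"], strict_chain=True))
```

Pasting a model's answer into `check_solution` shows exactly which rule it broke, instead of eyeballing answers that "almost look right".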

your instructions were intriguing, so I clicked the link for the game and got sucked in

(contains answer)

that was fun! I’ve never played anything like that before, thank you for the link ❤️

We got another one!

Why did it spell LT. VAUSH W. BONE

That is so fascinating. I just tried it with the most recent version of g pee t and it failed even after I tried to correct it multiple times

So does Microsoft’s and the other big ones. They each fail in their own way.

Sam Altman says a lot of things.

Never read anything this guy has ever said that shouldn’t be responded to with “haha okay dork”

I’m starting to think he’s actually stupid enough to think LLMs are intelligent

But nothing happens in decades

G I V E

M E

Y O U R

M O N E Y

D R I N K

M O R E

O V A L T I N E